Researchers populated a tiny virtual town with AI (and it was very wholesome)

What would happen if you filled a virtual town with AIs and set them loose? As it turns out, they brush their teeth and are very nice to one another! But this unexciting outcome is good news for the researchers who did it, since they wanted to produce “believable simulacra of human behavior” and got just that.

The paper describing the experiment, by Stanford and Google researchers, has not been peer reviewed or accepted for publication anywhere, but it makes for interesting reading nonetheless. The idea was to see if they could apply the latest advances in machine learning models to produce “generative agents” that take in their circumstances and output a realistic action in response.

And that’s very much what they got. But before you get taken in by the cute imagery and descriptions of reflection, conversation and interaction, let’s make sure you understand that what’s happening here is more like an improv troupe role-playing on a MUD than any kind of proto-Skynet. (Only millennials will understand the preceding sentence.)

These little characters aren’t quite what they appear to be. The graphics are just a visual representation of what is essentially a bunch of conversations between multiple instances of ChatGPT. The agents don’t walk up, down, left and right or approach a cabinet to interact with it. All this is happening through a complex and hidden text layer that synthesizes and organizes the information pertaining to each agent.

There are 25 agents, meaning 25 instances of ChatGPT, each prompted with similarly formatted information that causes it to play the role of a person in a fictional town. Here’s how one such person, John Lin, is set up:

John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy who loves to help people. He is always looking for ways to make the process of getting medication easier for his customers; John Lin is living with his wife, Mei Lin, who is a college professor, and son, Eddy Lin, who is a student studying music theory; John Lin loves his family very much; John Lin has known the old couple next-door, Sam Moore and Jennifer Moore, for a few years; John Lin thinks Sam Moore is a kind and nice man…
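The seed description is just a run of semicolon-separated statements. As a rough Python sketch, assuming (this is our assumption, not something the article spells out) that each clause becomes one starting memory, setting up an agent might look like this. `Agent` and `seed_agent` are hypothetical names, not the researchers’ code:

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A hypothetical container for one simulated townsperson."""
    name: str
    memories: list[str] = field(default_factory=list)


def seed_agent(name: str, description: str) -> Agent:
    """Split a semicolon-separated seed description into initial memories.

    Assumption for illustration: one clause per memory, blank clauses skipped.
    """
    clauses = [c.strip() for c in description.split(";")]
    return Agent(name=name, memories=[c for c in clauses if c])


john = seed_agent(
    "John Lin",
    "John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy; "
    "John Lin loves his family very much; "
    "John Lin thinks Sam Moore is a kind and nice man",
)
print(len(john.memories))  # 3
print(john.memories[1])    # John Lin loves his family very much
```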

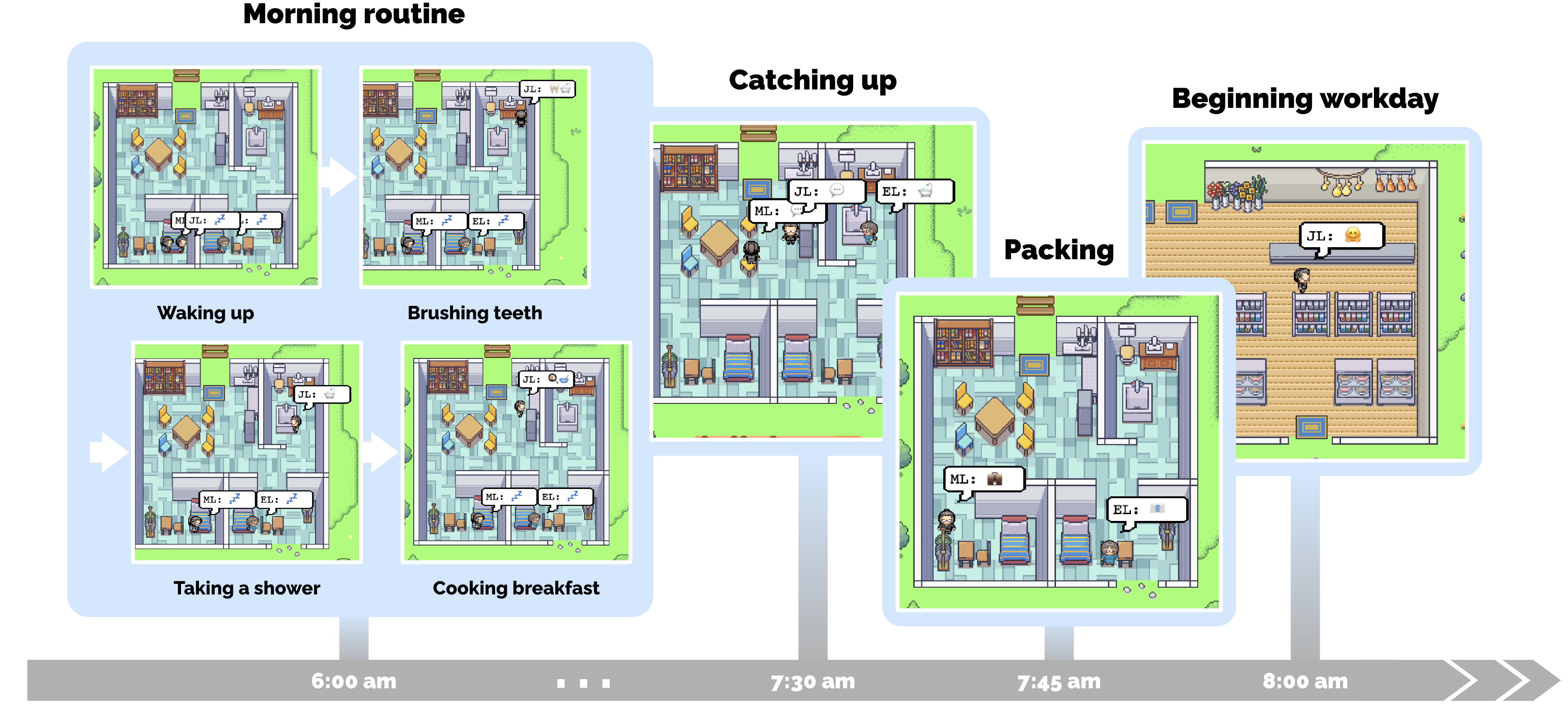

With that information, the agents are then asked to come up with their next actions given the time and circumstances. For instance, they might tell the John agent that it is 8 AM and he just woke up. What does he do? Well, he brushes his teeth, kisses his wife (hopefully in that order), gets dressed, then goes to the kitchen.

Meanwhile, another, totally independent ChatGPT instance representing John’s son Eddy has also been prompted with its own information. It too gets up, brushes its teeth, then goes to the kitchen.

And now the overarching structure of the experiment steps in: The agents representing John and Eddy aren’t both “in” the same virtual space or anything. Instead, when John has finished getting dressed and says he will move to the kitchen, the experimental framework informs him that his son Eddy is there, because in its own instance, Eddy has decided to move into the kitchen at an overlapping time in the experiment-level “day,” based on an estimate of how long various actions take.

Since they’re both in the room at the same time, the experimental setup informs them that the other person is there, along with details such as a table no one is sitting at and a stove that is on. What do they do next?
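The bookkeeping described here boils down to checking whether two agents’ planned actions land in the same place at overlapping times. Here is a minimal Python sketch of that check, assuming each action is stored as a location plus a start and end minute derived from an estimated duration. The `Activity` class and `who_else_is_here` function are hypothetical, not the researchers’ implementation:

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """One planned action on the shared clock (minutes after midnight)."""
    agent: str
    location: str
    start: int  # inclusive
    end: int    # exclusive: start plus the estimated duration


def overlaps(a: Activity, b: Activity) -> bool:
    """True if two activities share a location and their time windows intersect."""
    return a.location == b.location and a.start < b.end and b.start < a.end


def who_else_is_here(me: Activity, everyone: list[Activity]) -> list[str]:
    """Names of the other agents the framework would tell `me` about."""
    return sorted({o.agent for o in everyone if o.agent != me.agent and overlaps(me, o)})


plans = [
    Activity("John", "kitchen", 8 * 60 + 20, 8 * 60 + 40),
    Activity("Eddy", "kitchen", 8 * 60 + 30, 8 * 60 + 50),
    Activity("Mei", "bedroom", 8 * 60 + 30, 8 * 60 + 45),
]
print(who_else_is_here(plans[0], plans))  # ['Eddy']
```

In the experiment, a result like this would then be turned back into text (“Eddy is in the kitchen”) and handed to each agent as an observation.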

John: Good morning Eddy. Did you sleep well?

Eddy: Good morning dad. Yeah, I slept great.

John: That’s good. What are you working on today?

Eddy: I’m working on a new music composition for my class. It’s due this week, so I’m trying to get it finished. But I’m having so much fun with it!

John: That sounds great!

What a nice, normal interaction between a parent and child. Then Mei comes in, and they have a pleasant interaction as well. And it’s all accomplished by asking totally separate chatbots what they would do if they were this person, in a fictional town, with a family like this, in a room at this time where this was happening… what would “they,” a real human, do?

So ChatGPT guesses at what a person would do in that situation and gives its answer as if it’s playing a text adventure. If you then told it, “it is pitch dark, you are likely to be eaten by a grue,” it would probably say it lights a torch. But instead, the experiment has the characters continue with their day minute by minute, buying groceries, walking in the park and going to work.

Image Credits: Google / Stanford University

Users can also write in events and circumstances, like a dripping faucet or a desire to plan a party, and the agents respond appropriately, since any text, for them, is reality.

All of this is performed by laboriously prompting all these instances of ChatGPT with all the minutiae of the agent’s immediate circumstances. Here’s a prompt for John when he runs into Eddy later:

It is February 13, 2023, 4:56 pm.

John Lin’s status: John is back home early from work.

Observation: John saw Eddy taking a short walk around his workplace.

Summary of relevant context from John’s memory:

Eddy Lin is John Lin’s son. Eddy Lin has been working on a music composition for his class. Eddy Lin likes to walk around the garden when he is thinking about or listening to music.

John is asking Eddy about his music composition project. What would he say to Eddy?

[Answer:] Hey Eddy, how’s the music composition project for your class coming along?
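A prompt like the one above is assembled from a few slots of plain text. The following Python sketch mirrors that layout. `build_prompt` is a hypothetical helper, and the call that would actually send the string to the language model is deliberately left out:

```python
def build_prompt(timestamp: str, name: str, status: str, observation: str,
                 memory_summary: str, question: str) -> str:
    """Assemble an agent prompt in the same layout as the John Lin example.

    Every field is plain text; the language model only ever sees the
    returned string, so whatever goes into `observation` becomes its reality.
    """
    sections = [
        f"It is {timestamp}.",
        f"{name}'s status: {status}",
        f"Observation: {observation}",
        f"Summary of relevant context from {name}'s memory:",
        memory_summary,
        question,
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    timestamp="February 13, 2023, 4:56 pm",
    name="John Lin",
    status="John is back home early from work.",
    observation="John saw Eddy taking a short walk around his workplace.",
    memory_summary=("Eddy Lin is John Lin's son. Eddy Lin has been working "
                    "on a music composition for his class."),
    question=("John is asking Eddy about his music composition project. "
              "What would he say to Eddy?"),
)
print(prompt)
# Sending `prompt` to the language model is omitted here; the reply
# would be John's next line of dialogue.
```

Because the observation slot accepts any string, a user-written event such as a dripping faucet would reach the agent exactly the way a “real” observation does.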

Because the process is so long-winded, the instances would quickly begin to forget important things. So the experimental framework sits on top of the simulation, reminding them of what matters or synthesizing it into more portable pieces.
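The idea of keeping memories outside the conversation and pulling back only what matters can be illustrated with a toy memory store. This Python sketch ranks stored entries by word overlap with the current situation, breaking ties in favor of newer entries. That scoring rule, the stopword list, and `MemoryStream` itself are assumptions for illustration, not the retrieval method described in the paper:

```python
import re
from dataclasses import dataclass, field

# A tiny, hypothetical stopword list so filler words don't count as relevance.
STOPWORDS = frozenset({"a", "an", "the", "is", "on", "for", "his", "her", "of", "to", "what", "in"})


def _words(text: str) -> set[str]:
    """Lowercased content words, used as a crude relevance signal."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


@dataclass
class MemoryStream:
    """Hypothetical long-term store kept outside the chat conversation."""
    entries: list[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        """Record a memory; nothing is deleted from the store."""
        self.entries.append(text)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return the k entries sharing the most words with `query`.

        Ties go to the more recently added entry. Only these k entries
        would be pasted into the next prompt; the rest stay in storage.
        """
        q = _words(query)
        ranked = sorted(
            enumerate(self.entries),
            key=lambda pair: (len(q & _words(pair[1])), pair[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


mem = MemoryStream()
mem.add("Eddy Lin is John Lin's son.")
mem.add("The stove is on.")
mem.add("Eddy Lin has been working on a music composition for his class.")
print(mem.retrieve("What is Eddy working on for his music class?"))
```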

For instance, say the agent is told about a scene in the park: someone is sitting on a bench and having a conversation with another agent, but there is also grass, context and one empty seat at the bench… none of which is important. What is important? From all those observations, which may make up pages of text for the agent, you might get the “reflection” that “Eddy and Fran are friends because I saw them together at the park.” That gets entered in the agent’s long-term “memory” (a bunch of stuff stored outside the ChatGPT conversation), and the rest can be forgotten.
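Reflection, in the same spirit, is a compression step: many raw observations in, one sentence out. In this hedged Python sketch the `summarize` argument stands in for the language model call, and the fixed backlog size that triggers a reflection is an arbitrary, hypothetical choice:

```python
from typing import Callable


def reflect(observations: list[str],
            long_term: list[str],
            summarize: Callable[[list[str]], str],
            threshold: int = 3) -> list[str]:
    """Fold a backlog of raw observations into one stored reflection.

    Once the backlog reaches `threshold` items (a hypothetical trigger),
    `summarize` condenses it into a single sentence appended to `long_term`.
    Returns the observations still pending: unchanged if no reflection
    happened, otherwise an empty list (the raw details are forgotten).
    """
    if len(observations) < threshold:
        return observations
    long_term.append(summarize(observations))
    return []


def fake_summarize(obs: list[str]) -> str:
    """Stand-in for the language model: ignores its input, returns a canned reflection."""
    return "Eddy and Fran are friends because I saw them together at the park."


long_term: list[str] = []
pending = [
    "Eddy is sitting on a bench.",
    "Eddy is talking with Fran.",
    "There is one empty seat on the bench.",
]
pending = reflect(pending, long_term, fake_summarize)
print(pending)    # []
print(long_term)  # ['Eddy and Fran are friends because I saw them together at the park.']
```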

So, what does all this rigmarole add up to? Something less than true generative agents as proposed by the paper, to be sure, but also an extremely compelling early attempt to create them. Dwarf Fortress simulates the daily lives of its characters too, of course, but by hand-coding every possibility. That doesn’t scale well!

It was not obvious that a large language model like ChatGPT would respond well to this kind of treatment. After all, it wasn’t designed to imitate arbitrary fictional characters long term or to speculate on the most mind-numbing details of a person’s day. But handled correctly, and with a fair amount of massaging, not only can a single agent do so, but multiple agents don’t break when you use them as pieces in a sort of virtual diorama.

This has potentially huge implications for simulations of human interactions, wherever those may be relevant. Games and virtual environments are the obvious case, though this approach is still monstrously impractical for them. What matters, though, is not that it is something everyone can use or play with (though it will be soon, I have no doubt), but that the system works at all. We have seen it again and again in AI: if a system can do something poorly, the fact that it can do it at all generally means it’s only a matter of time before it does it well.

You can read the full paper, “Generative Agents: Interactive Simulacra of Human Behavior,” on arXiv.