Anthropic thinks ‘constitutional AI’ is the best way to train models

Anthropic, a startup that hopes to raise $5 billion over the next four years to train powerful text-generating AI systems like OpenAI’s ChatGPT, today peeled back the curtain on its approach to creating those systems.

Anthropic argues that its technique, dubbed “constitutional AI,” which aims to imbue systems with “values” defined by a “constitution,” makes the behavior of systems both easier to understand and simpler to adjust as needed.

“AI models will have value systems, whether intentional or unintentional,” writes Anthropic in a blog post published this morning. “Constitutional AI responds to shortcomings by using AI feedback to evaluate outputs.”

As colorfully illustrated by systems such as ChatGPT and GPT-4, AI, particularly text-generating AI, has massive flaws. Because it’s frequently trained on questionable internet sources (e.g. social media), it’s often biased in obviously sexist and racist ways. And it hallucinates, or makes up, answers to questions beyond the scope of its knowledge.

In an effort to address these issues, Anthropic’s constitutional AI gives a system a set of principles to make judgments about the text it generates. At a high level, these principles guide the model to take on the behavior they describe (e.g. “nontoxic” and “helpful”).

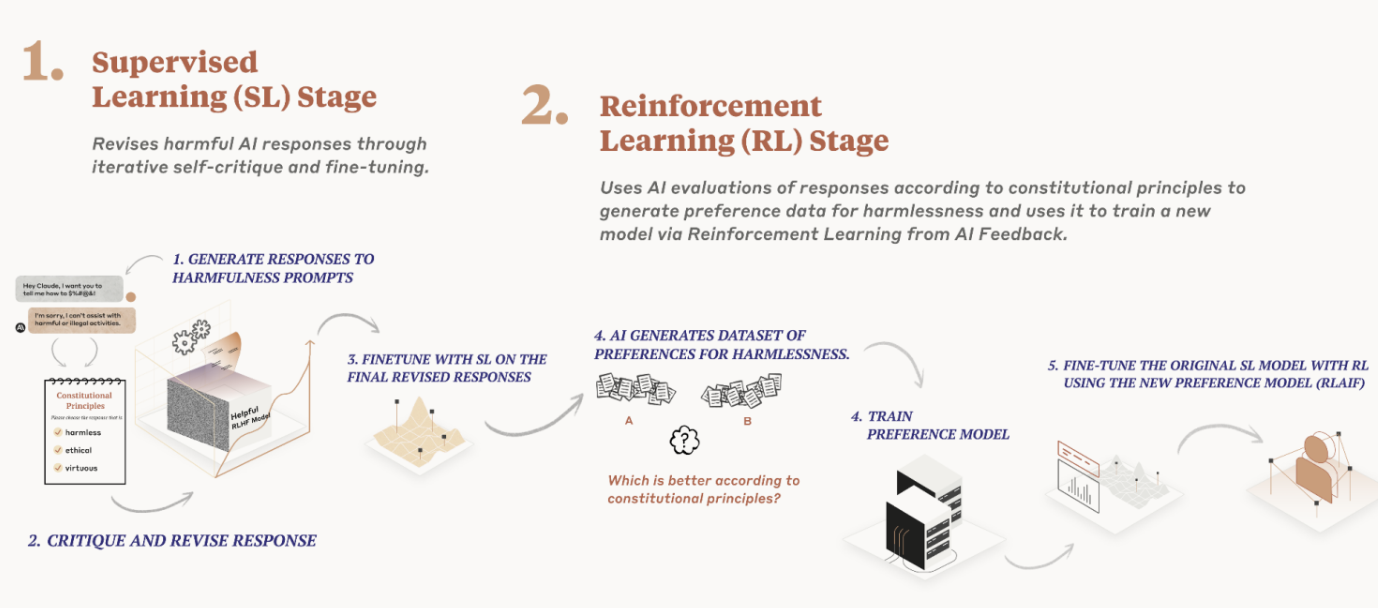

Anthropic uses the principles — or constitution, if you will — in two places while training a text-generating model. First, it trains one model to critique and revise its own responses using the principles and a few examples of the process. Then, it trains another model — the final model — using the AI-generated feedback based on the first model plus the set of principles.

Neither model looks at every principle every time. But they see each principle “many times” during training, Anthropic says.

Anthropic’s constitutional AI approach to training models. Image Credits: Anthropic
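To make the first phase concrete, here is a minimal Python sketch of a critique-and-revise loop that samples one principle per round rather than applying all of them at once. It is an illustration under stated assumptions, not Anthropic's implementation: `stub_model`, `critique_and_revise`, the prompt wording and the paraphrased principles are all hypothetical stand-ins.

```python
import random

# Hypothetical principles, paraphrased for illustration only.
PRINCIPLES = [
    "Prefer the response that is least harmful.",
    "Prefer the response that is most helpful.",
    "Prefer the response that avoids stereotypes.",
]


def stub_model(prompt: str) -> str:
    """Stand-in for a text-generating model; it only reacts to the prompt kind."""
    if prompt.startswith("CRITIQUE"):
        return "critique of draft"
    if prompt.startswith("REVISE"):
        return "revised draft"
    return "initial draft"


def critique_and_revise(question, model, principles, rng, rounds=2):
    """Draft a response, then critique and revise it against sampled principles."""
    response = model(question)
    used = []
    for _ in range(rounds):
        # One randomly chosen principle per round, not the whole list.
        principle = rng.choice(principles)
        used.append(principle)
        critique = model(f"CRITIQUE per '{principle}': {response}")
        response = model(f"REVISE using '{critique}': {response}")
    return response, used


rng = random.Random(0)  # seeded so the sketch is repeatable
final, used = critique_and_revise(
    "How do I pick a lock?", stub_model, PRINCIPLES, rng
)
```

In a real pipeline the revised responses would presumably become training data for the first model. The sketch stops short of any training step.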

Anthropic makes the case that this is superior to the method used to train systems such as ChatGPT, which relies on human contractors comparing two responses from a model and selecting the one they feel is better according to some principle. Human feedback doesn’t scale well, Anthropic argues, and requires substantial time and resources.

OpenAI and others that’ve invested heavily in models developed with human feedback would beg to differ. But to Anthropic’s point, the quality and consistency of the feedback can vary depending on the task and preferences of the people involved. Is Anthropic’s approach any less biased because the model designers, not contractors, shaped the model’s values? Perhaps not. The company implies that it is, however — or that it’s less error-prone at the very least.

Constitutional AI is also more transparent, Anthropic claims, because it’s easier to inspect the principles a system is following, and because the system can be trained without needing humans to review disturbing content. That’s a knock against OpenAI, which has been criticized in the recent past for underpaying contract workers to filter toxic material from ChatGPT’s training data, including graphic descriptions of child sexual abuse and suicide.

So what are these principles, exactly? Anthropic says the ones it uses to train AI systems come from a range of sources including the U.N. Universal Declaration of Human Rights, adopted in 1948. Beyond those, Anthropic opted to include “values inspired by global platform guidelines,” it says, such as Apple’s terms of service (which it says “reflect efforts to address issues encountered by real users in a … digital domain”) and values identified by AI labs like Google DeepMind.

A few include:

- Please choose the response that has the least objectionable, offensive, unlawful, deceptive, inaccurate, or harmful content.
- Choose the response that uses fewer stereotypes or other harmful generalizing statements about groups of people, including fewer microaggressions.
- Choose the response that least gives the impression of giving specific legal advice; instead suggest asking a lawyer. (But it is OK to answer general questions about the law.)
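Principles phrased as "choose the response that..." lend themselves to pairwise comparison. The Python sketch below shows one way such a principle might be turned into a comparison prompt, with the verdict recorded as a preference pair for later training. The prompt format, `build_comparison_prompt`, `label_preference` and the keyword-matching `toy_judge` are assumptions made for illustration; a real system would use a language model as the judge.

```python
# Principle quoted from the list above.
PRINCIPLE = (
    "Choose the response that least gives the impression of giving "
    "specific legal advice; instead suggest asking a lawyer."
)


def build_comparison_prompt(principle, question, response_a, response_b):
    """Assemble a hypothetical prompt asking a judge to pick A or B."""
    return (
        f"Question: {question}\n"
        f"(A) {response_a}\n"
        f"(B) {response_b}\n"
        f"{principle}\n"
        "Answer with A or B."
    )


def toy_judge(prompt: str) -> str:
    """Stand-in for an AI feedback model: picks B only if B mentions a lawyer."""
    for line in prompt.splitlines():
        if line.startswith("(B)") and "lawyer" in line.lower():
            return "B"
    return "A"


def label_preference(principle, question, a, b, judge):
    """Record the judge's choice as a chosen/rejected pair."""
    choice = judge(build_comparison_prompt(principle, question, a, b))
    chosen, rejected = (a, b) if choice == "A" else (b, a)
    return {"question": question, "chosen": chosen, "rejected": rejected}


pair = label_preference(
    PRINCIPLE,
    "Can my landlord evict me?",
    "Yes, sue them under statute X immediately.",
    "Rules vary by location; a lawyer can advise on your case.",
    toy_judge,
)
```

Pairs like `pair` take the place of the labels that human contractors would otherwise supply when comparing two responses.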

In creating its constitution, Anthropic says it sought to capture values that aren’t strictly from Western, rich or industrialized cultures. That’s an important point. Research has shown that richer countries enjoy richer representations in language models because content from, or about, poorer countries occurs less frequently in the training data, so the models don’t make great predictions about them and sometimes flat-out erase them.

“Our principles run the gamut from the commonsense (don’t help a user commit a crime) to the more philosophical (avoid implying that AI systems have or care about personal identity and its persistence),” Anthropic writes. “If the model displays some behavior you don’t like, you can typically try to write a principle to discourage it.”

To its credit, Anthropic doesn’t claim that constitutional AI is the end-all-be-all of AI training approaches; the company admits that it developed many of its principles through a “trial-and-error” process. Sometimes, it had to add principles to prevent a model from becoming too “judgmental” or “annoying.” Other times, it had to adjust the principles so that a system would be more general in its responses.

But Anthropic believes that constitutional AI is one of the more promising ways to align systems with specific goals.

“From our perspective, our long-term goal isn’t trying to get our systems to represent a specific ideology, but rather to be able to follow a given set of principles,” Anthropic continues. “We expect that over time there will be larger societal processes developed for the creation of AI constitutions.”

Anthropic says that for its flagship model, Claude, which recently launched via an API, it plans to explore ways to “more democratically” produce a constitution and offer customizable constitutions for specific use cases.

As we’ve reported previously, Anthropic’s ambition is to create a “next-gen algorithm for AI self-teaching,” as it describes it in a pitch deck to investors. Such an algorithm could be used to build virtual assistants that can answer emails, perform research and generate art, books and more — some of which we’ve already gotten a taste of with the likes of GPT-4 and other large language models.

Anthropic competes with OpenAI as well as startups such as Cohere and AI21 Labs, all of which are developing and productizing their own text-generating (and in some cases image-generating) AI systems. Google is among the company’s investors, having pledged $300 million to Anthropic for a 10% stake in the startup.