What Sam Altman said about AI at a CEO summit the day before OpenAI ousted him as CEO

Sam Altman is out as CEO of OpenAI after a “boardroom coup” on Friday that shook the tech industry. Some are likening his ouster to Steve Jobs being fired at Apple, a sign of how momentous the shakeup feels amid an AI boom that has rejuvenated Silicon Valley.

Altman, of course, had much to do with that boom, caused by OpenAI’s release of ChatGPT to the public late last year. Since then, he’s crisscrossed the globe talking to world leaders about the promise and perils of artificial intelligence. Indeed, for many he’s become the face of AI.

Where exactly things go from here remains uncertain. In the latest twists, some reports suggest Altman could return to OpenAI and others suggest he’s already planning a new startup.

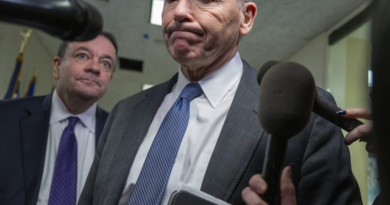

But either way, given recent events, his last appearance as OpenAI’s CEO merits attention. It occurred on Thursday at the APEC CEO summit in San Francisco. The beleaguered city, where OpenAI is based, hosted the Asia-Pacific Economic Cooperation summit this week, having first cleared away embarrassing encampments of homeless people (though it still suffered embarrassment when robbers stole a Czech news crew’s equipment).

Altman answered questions onstage from, somewhat ironically, moderator Laurene Powell Jobs, the billionaire widow of the late Apple cofounder. She asked Altman how policymakers can strike the right balance between regulating AI companies and remaining open to evolving as the technology itself evolves.

Altman started by noting that he’d had dinner this summer with historian and author Yuval Noah Harari, who has issued stark warnings about the dangers of artificial intelligence to democracies, even suggesting tech executives should face 20 years in jail for letting AI bots sneakily pass as humans.

The Sapiens author, Altman said, “was very concerned, and I understand it. I really do understand why if you have not been closely tracking the field, it feels like things just went vertical…I think a lot of the world has collectively gone through a lurch this year to catch up.”

He noted that people can now talk to ChatGPT, saying it’s “like the Star Trek computer I was always promised.” The first time people use such products, he said, “it feels much more like a creature than a tool,” but eventually they get used to it and see its limitations (as some embarrassed lawyers have).

He said that, on the one hand, AI holds the potential to do wonderful things like cure diseases, but on the other, “How do we make sure it is a tool that has proper safeguards as it gets really powerful?”

Today’s AI tools, he said, are “not that powerful,” but “people are smart and they see where it’s going. And even though we can’t quite intuit exponentials well as a species much, we can tell when something’s gonna keep going, and this is going to keep going.”

The questions, he said, are what limits on the technology will be put in place, who will decide those, and how they’ll be enforced internationally.

Grappling with those questions “has been a significant chunk of my time over the last year,” he noted, adding, “I really think the world is going to rise to the occasion and everybody wants to do the right thing.”

Today’s technology, he said, doesn’t need heavy regulation. “But at some point—when the model can do like the equivalent output of a whole company and then a whole country and then the whole world—maybe we do want some collective global supervision of that and some collective decision-making.”

For now, Altman said, it’s hard to “land that message” and not appear to be suggesting policymakers should ignore present harms. He also doesn’t want to suggest that regulators should go after AI startups or open-source models, or bless AI leaders like OpenAI with “regulatory capture.”

“We are saying, you know, ‘Trust us, this is going to get really powerful and really scary. You’ve got to regulate it later’—very difficult needle to thread through all of that.”