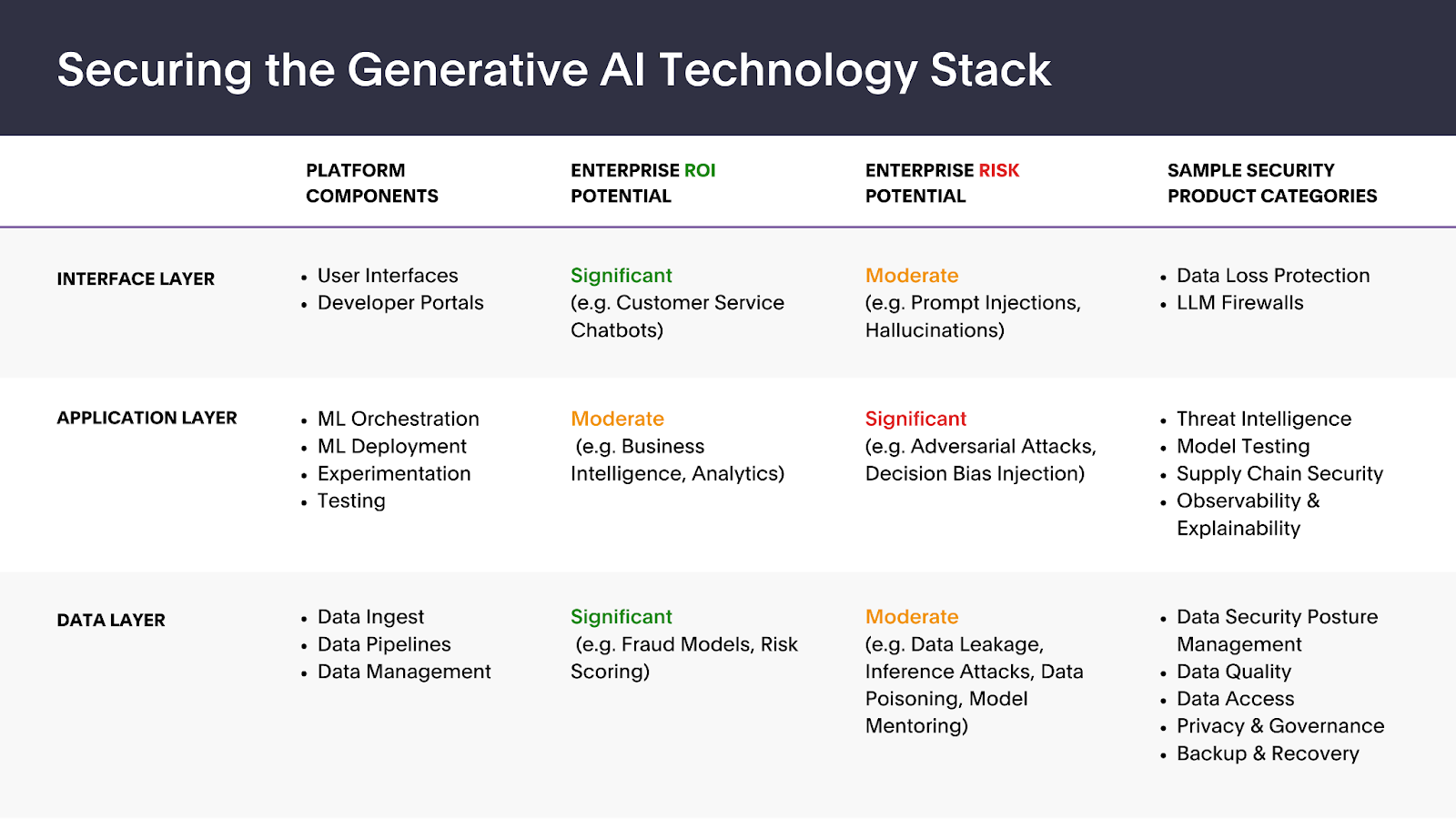

Securing generative AI across the technology stack

Research shows that by 2026, over 80% of enterprises will be leveraging generative AI models, APIs, or applications, up from less than 5% today.

This rapid adoption raises new considerations regarding cybersecurity, ethics, privacy, and risk management. Among companies using generative AI today, only 38% mitigate cybersecurity risks, and just 32% work to address model inaccuracy.

My conversations with security practitioners and entrepreneurs have concentrated on three key factors:

- Enterprise generative AI adoption brings additional complexities to security challenges, such as overprivileged access. For instance, while conventional data loss prevention tools effectively monitor and control data flows into AI applications, they often fall short with unstructured data and more nuanced factors such as ethical rules or biased content within prompts.

- Market demand for various GenAI security products is closely tied to the trade-off between ROI potential and inherent security vulnerabilities of the underlying use cases for which the applications are employed. This balance between opportunity and risk continues to evolve based on the ongoing development of AI infrastructure standards and the regulatory landscape.

- Much like traditional software, generative AI must be secured across all architecture levels, particularly the core interface, application, and data layers. Below is a snapshot of various security product categories within the technology stack, highlighting areas where security leaders perceive significant ROI and risk potential.

Image Credits: Forgepoint Capital

Widespread adoption of GenAI chatbots will prioritize the ability to accurately and quickly intercept, review, and validate inputs and corresponding outputs at scale without diminishing user experience.

Interface layer: Balancing usability with security

Businesses see immense potential in leveraging customer-facing chatbots, particularly customized models trained on industry and company-specific data. The user interface is susceptible to prompt injections, a variant of injection attacks aimed at manipulating the model’s response or behavior.

In addition, chief information security officers (CISOs) and security leaders are increasingly under pressure to enable GenAI applications within their organizations. While the consumerization of the enterprise has been an ongoing trend, the rapid and widespread adoption of technologies like ChatGPT has sparked an unprecedented, employee-led drive for their use in the workplace.

Widespread adoption of GenAI chatbots will prioritize the ability to accurately and quickly intercept, review, and validate inputs and corresponding outputs at scale without diminishing user experience. Existing data security tooling often relies on preset rules, resulting in false positives. Tools like Protect AI’s Rebuff and Harmonic Security leverage AI models to dynamically determine whether or not the data passing through a GenAI application is sensitive.