Nvidia shatters stock market record by adding $230 billion in value in one day. Here’s why it’s dominating the AI chip race

Chip designer Nvidia has emerged as the clear winner in not just the early stages of the AI boom but, at least so far, in all of stock market history. The $1.9 trillion AI giant surged to a record-high stock price on Thursday, putting it on course to add over $230 billion to its market capitalization and shatter a one-day record only weeks old: Meta’s $197 billion gain in early February.

It’s dominating the market, selling over 70% of all AI chips, and startups are desperate to spend hundreds of thousands of dollars on Nvidia’s hardware systems. Wall Street can’t get enough, either: Nvidia stock rocketed up an astonishing 15% after the company smashed its lofty earnings targets for last quarter, pushing its market cap past $1.9 trillion. That’s on top of the stock’s value tripling in the past year alone.

So … why? How is it that a company founded all the way back in 1993 has displaced tech titans Alphabet and Amazon, leapfrogging them to become the third-most valuable company in the world? It all comes down to Nvidia’s leading semiconductor chips for use in artificial intelligence.

The company that ‘got it’

Nvidia built up its advantage by playing the long game and investing in AI years before ChatGPT hit the market, and its chip designs are so far ahead of the competition that analysts wonder if it’s even possible for anyone else to catch up. Designers such as Arm Holdings and Intel, for instance, haven’t yet integrated hardware with AI-targeted software in the way Nvidia has.

“This is one of the great observations that we made: We realized that deep learning and AI was not [just] a chip problem … Every aspect of computing has fundamentally changed,” said Nvidia cofounder and CEO Jensen Huang at the New York Times DealBook Summit in November. “We observed and realized that about a decade and a half ago. I think a lot of people are still trying to sort that out.” Huang added that Nvidia just “got it” before anyone else did: “The reason why people say we’re practically the only company doing it is because we’re probably the only company that got it. And people are still trying to get it.”

Software has been a key part of that equation. While competitors have focused their efforts on chip design, Nvidia has aggressively pushed CUDA, its programming platform that lets developers write software that runs directly on its chips. That dual emphasis on software and hardware has made Nvidia chips the must-have tool for any developer looking to get into AI.

“Nvidia has done just a masterful job of making it easier to run on CUDA than to run on anything else,” said Edward Wilford, an analyst at tech consultancy Omdia. “CUDA is hands down the jewel in Nvidia’s crown. It’s the thing that’s gotten them this far. And I think it’s going to carry them for a while longer.”

AI needs computing power, and a lot of it. AI chatbots such as ChatGPT are trained by inhaling vast quantities of data sourced from the internet, up to a trillion distinct pieces of information. That data is fed into a neural network that catalogs the associations between words and phrases; after further fine-tuning with human feedback, the network can produce natural-language responses to user queries. Processing that much data requires huge amounts of hardware capacity, and hardware demand is only expected to increase as the AI field continues to grow. That’s put Nvidia, the sector’s biggest seller, in a great position to benefit.

Huang struck a similar note on his triumphant earnings call on Wednesday. Highlighting the shift from general-purpose computing to what he called “accelerated computing” at data centers, he argued that it’s “a whole new way of doing computing” and even crowned it “a whole new industry.”

Early to the AI boom

Nvidia has been at the forefront of AI hardware from the start. When large-scale AI research from startups such as OpenAI started ramping up in the mid-2010s, Nvidia—through a mixture of luck and smart bets—was in the right place at the right time.

Nvidia had long been known for its innovative GPUs (graphics processing units), a type of chip popular in gaming applications. CPUs (central processing units), the general-purpose chips at the heart of most computers, excel at performing complicated calculations in sequence, one after another. GPUs, by contrast, can perform many simple calculations at once, making them excellent at handling the complex graphics processing that video games demand. As it turned out, Nvidia’s GPUs were a perfect fit for the type of computing systems AI developers needed to build and train large language models (LLMs), the technology behind chatbots like ChatGPT.
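To make the CPU/GPU distinction concrete, here is a minimal Python sketch. Plain Python runs on a CPU, so it only illustrates the shape of the work, not GPU speed. Brightening each pixel of an image is a simple calculation that doesn’t depend on any other pixel, so the pixels can be processed in any order, or all at once, which is the kind of work a GPU spreads across thousands of cores. A running total, by contrast, needs each step’s result before the next step can start. The function names and pixel values are hypothetical, chosen for illustration, and are not drawn from Nvidia’s software.

```python
import random


def brighten(pixel: int, amount: int = 40) -> int:
    """One simple, independent calculation: brighten a single 8-bit pixel."""
    return min(pixel + amount, 255)  # clamp to the 0-255 range


def brighten_image(pixels: list[int], order: list[int]) -> list[int]:
    """Apply brighten() to every pixel, visiting them in the given order.

    Each output depends only on its own input, so the visiting order
    cannot change the result (this is what lets a GPU do them all at once).
    """
    out = [0] * len(pixels)
    for i in order:
        out[i] = brighten(pixels[i])
    return out


def running_total(values: list[int]) -> list[int]:
    """A sequential computation: each step needs the previous step's result."""
    totals = []
    acc = 0
    for v in values:
        acc += v
        totals.append(acc)
    return totals


pixels = [0, 100, 200, 250, 30]
in_order = brighten_image(pixels, list(range(len(pixels))))

shuffled = list(range(len(pixels)))
random.shuffle(shuffled)  # visit the pixels in a random order
any_order = brighten_image(pixels, shuffled)

print(in_order)                     # [40, 140, 240, 255, 70]
print(in_order == any_order)        # True
print(running_total([1, 2, 3, 4]))  # [1, 3, 6, 10]
```

Because `brighten_image` gives the same answer whatever order it visits the pixels, the work could be split across as many processors as are available; `running_total` offers no such shortcut. The matrix arithmetic at the heart of neural network training has the same many-small-independent-calculations structure, which is why GPUs carried over so well to AI.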

“To some extent, you could say they’ve been extremely lucky. But I think that diminishes it—they have capitalized perfectly on every instance of luck, on every opportunity they were given,” said Wilford. “If you go back five or 10 years, you see this ramp-up in console gaming. They rode that, and then when they felt that wave cresting, they got into cryptocurrency mining, and they rode that. And then just as that wave crested, AI started to take off.”

In fact, Nvidia had been quietly developing AI-targeted hardware for years. As far back as 2012, Nvidia chips were the technical foundation of AlexNet, the groundbreaking early neural network developed in part by OpenAI cofounder and former chief scientist Ilya Sutskever, who recently left OpenAI after trying to oust CEO Sam Altman. That first-mover advantage has given Nvidia a big leg up over its competitors.

“They were visionaries … For Jensen, that goes back to his days at Stanford,” said Wilford. “He’s been waiting for this opportunity the whole time. And he’s kept Nvidia in a position to jump on it whenever the chance came. What we’ve seen in the last few years is that strategy executed to perfection. I can’t imagine someone doing better with it than Nvidia has.”

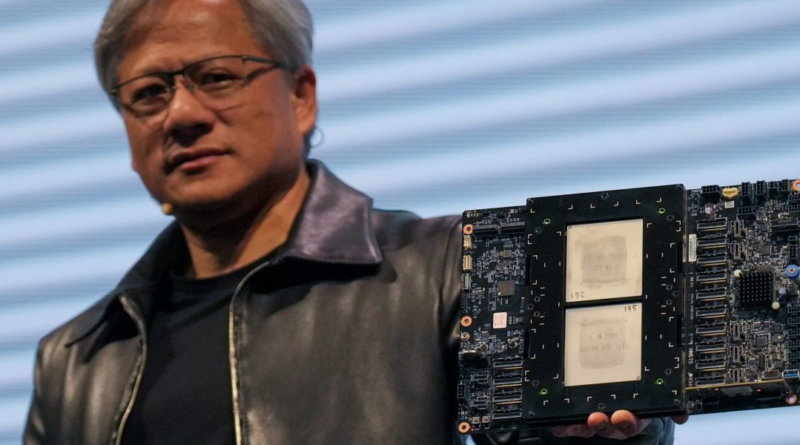

Since its early AI investments over a decade ago, Nvidia has turned those bets into a hugely profitable AI hardware business. Its flagship systems built around the Hopper GPU sell for a quarter of a million dollars per unit. Each is a 70-pound supercomputer built from 35,000 individual parts, and the waiting list for customers to get their hands on one is months long. Desperate AI developers are turning to organizations like the San Francisco Compute Group, which rents out computing power by the hour from its collection of Nvidia chips. (As of this article’s publication, they’re booked out for almost a month.)

Nvidia’s AI chip juggernaut is poised to grow even more if AI growth meets analysts’ expectations.

“Nvidia delivered against what was seemingly a very high bar,” wrote Goldman Sachs in its Nvidia earnings analysis. “We expect not only sustained growth in gen AI infrastructure spending by the large CSPs [cloud service providers] and consumer internet companies, but also increased development and adoption of AI across enterprise customers representing various industry verticals and, increasingly, sovereign states.”

There are some potential threats to Nvidia’s market domination. For one, the company’s most recent earnings showed that U.S. restrictions on exports to China dinged its business, and a potential increase in competition from Chinese chip designers could put pressure on Nvidia’s global market share. Nvidia also depends on Taiwanese chip foundry TSMC to actually manufacture many of the chips it designs. The Biden administration has been pushing for more investment in domestic manufacturing through the CHIPS Act, but Huang himself has said it would take at least a decade for American foundries to be fully operational.

“[Nvidia is] highly dependent on TSMC in Taiwan, and there are regional complications [associated with that], there are political complications,” said Wilford. “[And] the Chinese government is investing very heavily in developing their own AI capabilities as a result of some of those same tensions.”