Sentry’s AI-powered Autofix helps developers quickly debug and fix their production code

Sentry has long helped developers monitor and debug their production code. Now, the company is adding some AI smarts to this process by launching AI Autofix, a new feature that uses all of the contextual data Sentry has about a company’s production environment to suggest fixes whenever an error occurs. While it’s called Autofix, this isn’t a completely automated system, something very few developers would be comfortable with. Instead, it is a human-in-the-loop tool that is “like having a junior developer ready to help on-demand,” as the company explains.

“Rather than thinking about the performance of your application — or your errors — from a system infrastructure perspective, we’re really trying to focus on evaluating it and helping you solve problems from a code-level perspective,” Sentry engineering manager Tillman Elser explained when I asked him how this new feature fits into the company’s overall product lineup.

Elser argued that many other AI-based coding tools are great for auto-completing code in the IDE, but since they don’t know about a company’s production environment, they can’t proactively look for issues. Autofix’s main value proposition, he explained, is that it can help developers speed up the process of triaging and resolving errors in production because it knows about the context the code is running in. “We’re trying to solve problems in production as fast as possible. We’re not trying to make you a faster developer when you’re building your application,” he said.

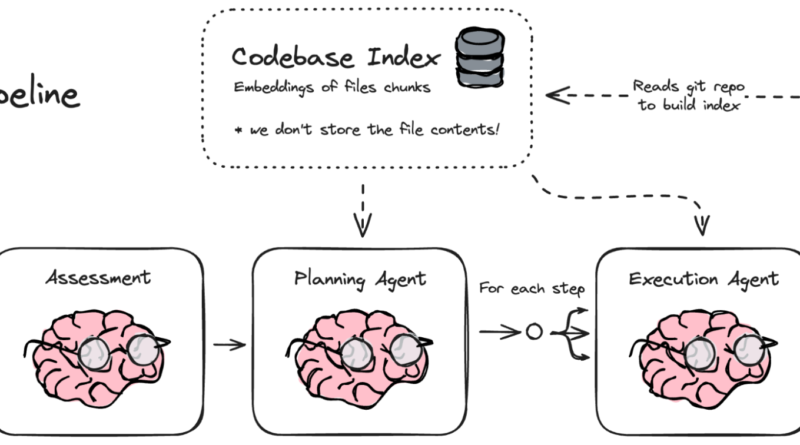

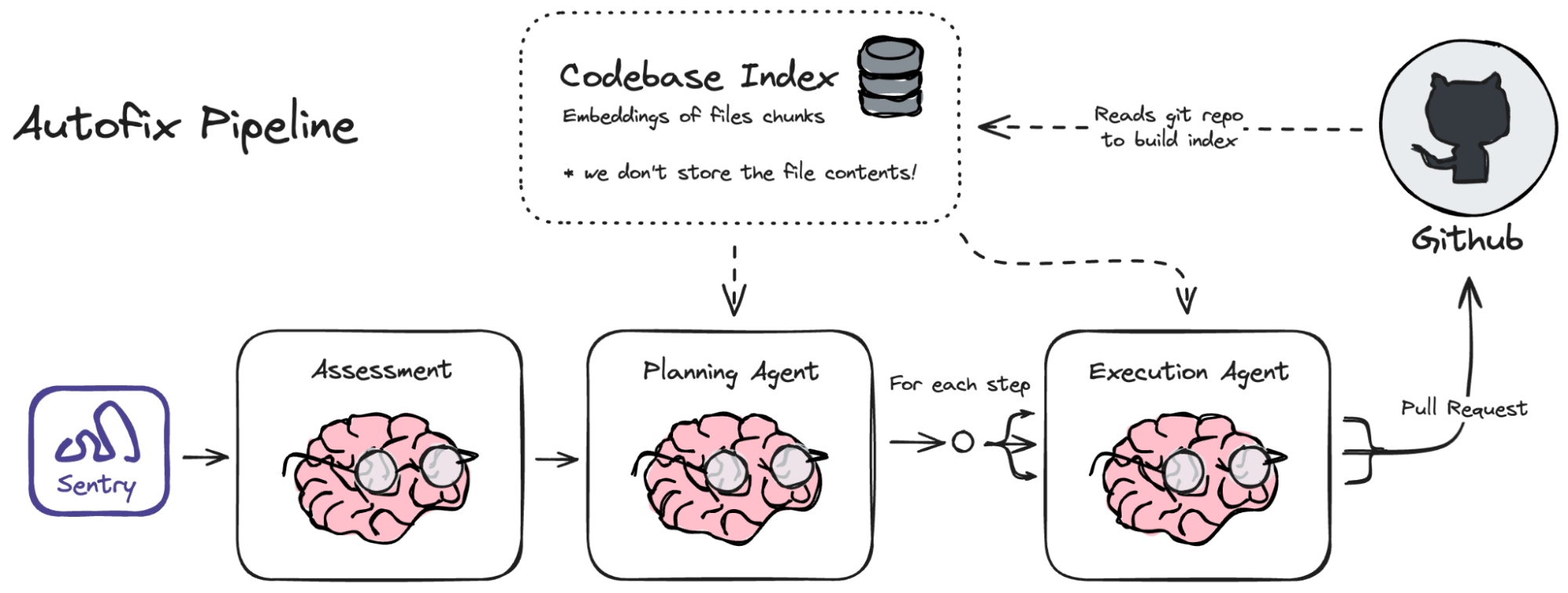

Using an agent-based architecture, Autofix will keep an eye out for errors and then use its discovery agent to determine whether a code change could fix a given error. If it can't, it will explain why. What's important here is that developers remain in the loop at all times. One nifty feature, for example, lets developers add context for the AI agents if they already have some idea of what the problem may be. Alternatively, they can hit the "gimme fix" button and see what the AI comes up with.

The AI will then go through a few steps to assess the issue and create an action plan to fix it. Along the way, Autofix shows developers a diff of the proposed changes with an explanation and then, if everything looks good, creates a pull request to merge those changes.

Autofix supports all major languages, though Elser acknowledged that the team did most of its testing with JavaScript and Python code. Obviously, it won't always get things right. There is a reason Sentry likens it to a junior developer, after all. The most common failure case, though, Elser told me, is when the AI simply doesn't have enough context, for example because the team hasn't set up enough instrumentation to gather the data Autofix needs.

One thing to note is that while Sentry is looking at building its own models, it currently works with third-party models from the likes of OpenAI and Anthropic. That also means users must opt in to sending their data to these third-party services to use Autofix. Elser said the company plans to revisit this in the future and may offer an in-house LLM fine-tuned on Sentry's own data.