Thoras.ai automates resource allocation for Kubernetes workloads

When the Soviet Union invaded Afghanistan in 1979, Thoras.ai founders Nilo Rahmani and Jennifer Rahmani weren’t even a twinkle in their parents’ eyes, but their parents were forced to flee along with the twins’ older siblings. The family eventually immigrated to the U.S. and settled in northern Virginia, where the twin girls were born. Both grew up to become engineers, working for Slack and the DoD respectively, where they helped implement cloud native solutions.

In their previous jobs, the Rahmani sisters recognized a problem with how engineers allocated resources to Kubernetes workloads: they relied too much on intuition and not enough on data. Having inherited some of their parents’ bravery, the sisters decided to leave their comfortable jobs and launch Thoras.ai to solve the problem.

Today, the company announced a $1.5 million pre-seed investment.

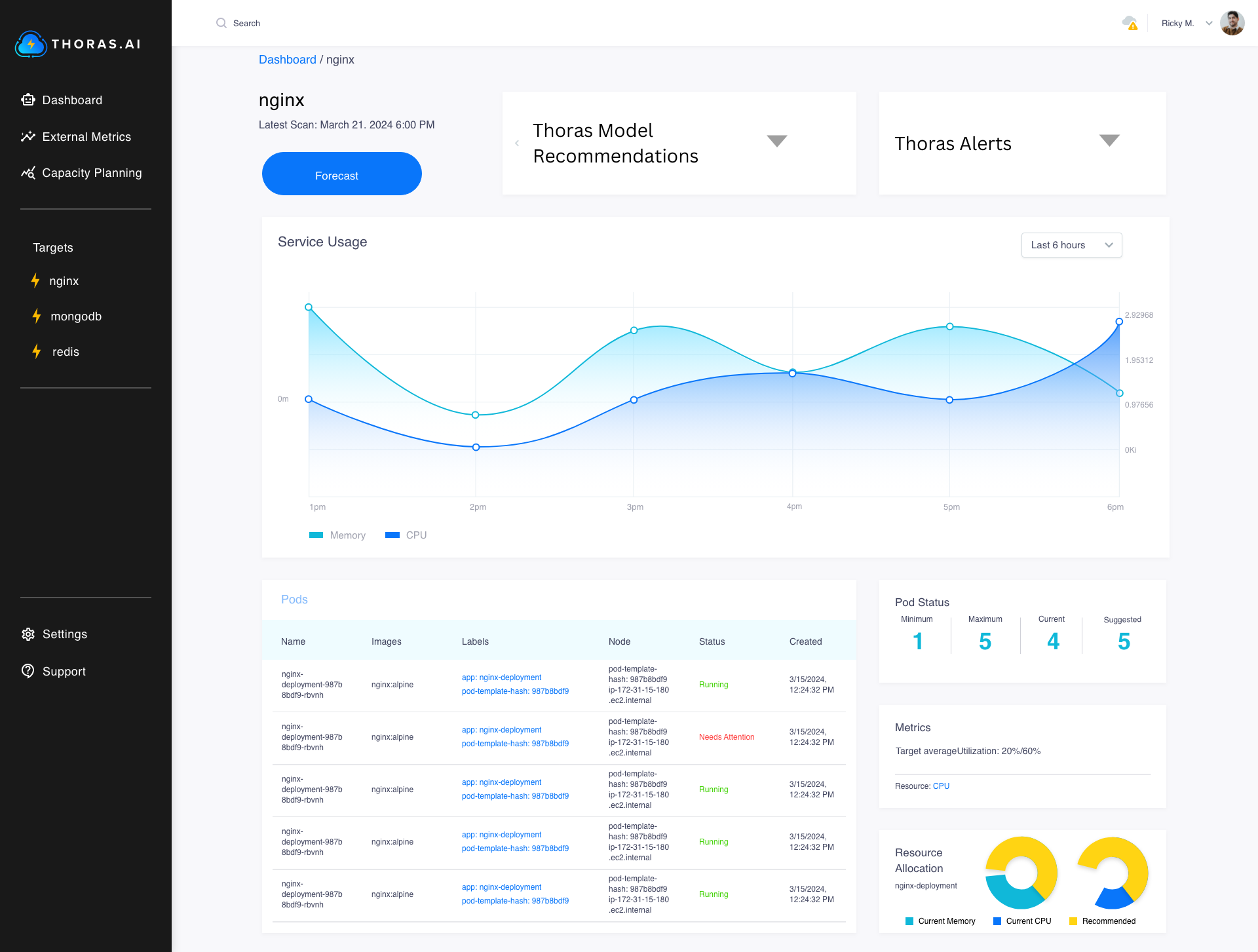

“Thoras essentially integrates alongside a cloud-based service and it consistently monitors the usage of that service,” company CEO Nilo Rahmani told TechCrunch. “So the goal is to not only forecast demand, but then to autonomously scale the application up or down in anticipation of increased traffic or decreased traffic.” The product can also notify an engineer of a performance issue, so the team knows about a problem before it blows up into something more serious.
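To make the forecast-then-scale idea concrete, here is a minimal sketch in Python. It is not Thoras's implementation, which the company has not disclosed. The function names, the linear-extrapolation forecast, the per-replica capacity figure and the replica bounds are all hypothetical, chosen only to illustrate the general pattern of predicting demand and sizing a workload ahead of it.

```python
import math


def forecast_next(samples, window=3):
    """Hypothetical forecast: extend the average recent step one interval ahead."""
    if not samples:
        raise ValueError("need at least one usage sample")
    recent = samples[-window:]
    # Average change between consecutive samples in the window (0 if only one sample).
    steps = [later - earlier for earlier, later in zip(recent, recent[1:])]
    trend = sum(steps) / len(steps) if steps else 0.0
    # Demand cannot be negative, so floor the extrapolation at zero.
    return max(0.0, recent[-1] + trend)


def desired_replicas(forecast_rps, rps_per_replica, min_replicas=2, max_replicas=10):
    """Size the workload for the forecast load, clamped to assumed safe bounds."""
    needed = math.ceil(forecast_rps / rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Example: requests per second observed over the last four intervals (made-up numbers).
usage = [100, 120, 150, 190]
predicted = forecast_next(usage)
replicas = desired_replicas(predicted, rps_per_replica=50)
print(f"forecast={predicted:.0f} rps, scale to {replicas} replicas")
```

With rising traffic the sketch scales up before the load arrives, and with falling traffic it scales down to the minimum rather than to zero. A production system would use a far richer model and would apply the result through the cluster's own scaling mechanisms.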

The founders launched the company right after the first of the year and closed their pre-seed funding just a few weeks ago. They have already released the first version of the product, are working in live customer environments and are generating revenue, all positive signs for an early-stage startup like this one.

While the founders didn’t want to get into too much detail about what’s happening on the back end, the application connects directly to the customer’s development environment with no APIs involved and no information traveling back and forth, as security and privacy were key design factors for them. Developers see a dashboard with key information about the application’s resources, and Rahmani says the team spent a lot of time making sure the dashboard provides a visually appealing user experience.

Image Credits: Thoras.AI

In terms of AI, the company currently uses more task-based machine learning than generative AI and large language models (LLMs). “A lot of the problems that we’re facing are systemic issues, and there are a lot of numbers involved. And so traditional machine learning and AI can be used to forecast what consumption looks like,” she said. That doesn’t mean the founders don’t foresee using LLMs down the road, but for now they want to focus on proactively looking for potential problems. They see LLMs becoming more useful for troubleshooting after the fact as they build out the product.

“We definitely have products in our roadmap that make use of LLMs, but natural language processing is super helpful in a situation where there’s a lot of words involved, and right now, we want to get to the root of the problem before it actually occurs instead of just going through logs to figure out what happened and why it happened after the fact,” she said.

They both certainly recognize that if their parents had stayed in Afghanistan, they might not have had the same educational opportunities, never mind the ability to start their own business. “There isn’t a day that I don’t think about how privileged I am to be in a country where I can pursue my dreams. I talk about that all the time,” Nilo said. Jennifer added, “It definitely helps drive us to work as hard as we can and succeed, I would say.”

Today’s pre-seed investment was co-led by Storytime Capital and Focal VC with participation from Hustle Fund, Precursor Ventures, the Pitch Fund and several unnamed strategic angel investors.