This Week in AI: OpenAI and publishers are partners of convenience

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

By the way, TechCrunch plans to launch an AI newsletter soon. Stay tuned. In the meantime, we’re upping the cadence of our semiregular AI column, which was previously twice a month (or so), to weekly — so be on the lookout for more editions.

This week in AI, OpenAI announced that it reached a deal with News Corp, the news publishing giant, to train OpenAI-developed generative AI models on articles from News Corp brands including The Wall Street Journal and MarketWatch. The agreement, which the companies describe as “multi-year” and “historic,” also gives OpenAI the right to display News Corp mastheads within apps like ChatGPT in response to certain questions, presumably in cases where the answers are sourced partly or in whole from News Corp publications.

Sounds like a win for both parties, no? News Corp gets an infusion of cash for its content — over $250 million, reportedly — at a time when the media industry’s outlook is even grimmer than usual. (Generative AI hasn’t helped matters, threatening to greatly reduce publications’ referral traffic.) Meanwhile, OpenAI, which is battling copyright holders on a number of fronts over fair use disputes, has one fewer costly court battle to worry about.

But the devil’s in the details. Note that the News Corp deal has an end date — as do all of OpenAI’s content licensing deals.

That in and of itself isn’t bad faith on OpenAI’s part. Licensing in perpetuity is a rarity in media, given the motivations of all parties involved to keep the door open to renegotiating the deal. However, it is a bit suspect in light of OpenAI CEO Sam Altman’s recent comments on the dwindling importance of AI model training data.

In an appearance on the “All-In” podcast, Altman said that he “definitely [doesn’t] think there will be an arms race for [training] data” because “when models get smart enough, at some point, it shouldn’t be about more data — at least not for training.” Elsewhere, he told MIT Technology Review’s James O’Donnell that he’s “optimistic” that OpenAI — and/or the broader AI industry — will “figure a way out of [needing] more and more training data.”

Models aren’t that “smart” yet, leading OpenAI to reportedly experiment with synthetic training data and scour the far reaches of the web — and YouTube — for organic sources. But let’s assume they one day don’t need much additional data to improve by leaps and bounds. Where does that leave publishers, particularly once OpenAI’s scraped their entire archives?

The point I’m getting at is that publishers — and the other content owners with whom OpenAI’s worked — appear to be short-term partners of convenience, not much more. Through licensing deals, OpenAI effectively neutralizes a legal threat — at least until the courts determine how fair use applies in the context of AI training — and gets to celebrate a PR win. Publishers get much-needed capital. And the work on AI that might gravely harm those publishers continues.

Here are some other AI stories of note from the past few days:

- Spotify’s AI DJ: Spotify’s addition of its AI DJ feature, which introduces personalized song selections to users, was the company’s first step into an AI future. Now, Spotify is developing an alternative version of that DJ that’ll speak Spanish, Sarah writes.

- Meta’s AI council: Meta on Wednesday announced the creation of an AI advisory council. There’s a big problem, though: it only has white men on it. That feels a little tone-deaf considering marginalized groups are those most likely to suffer the consequences of AI tech’s shortcomings.

- FCC proposes AI disclosures: The Federal Communications Commission (FCC) has floated a requirement that AI-generated content be disclosed in political ads — but not banned. Devin has the full story.

- Responding to calls in your voice: Truecaller, the widely known caller ID service, will soon allow customers to use its AI-powered assistant to answer phone calls in their own voice, thanks to a newly inked partnership with Microsoft.

- Humane considers a sale: Humane, the company behind the much-hyped Ai Pin that launched to less-than-glowing reviews last month, is on the hunt for a buyer. The company has reportedly priced itself between $750 million and $1 billion, and the sale process is in the early stages.

- TikTok turns to generative AI: TikTok is the latest tech company to incorporate generative AI into its ads business, as the company announced on Tuesday that it’s launching a new TikTok Symphony AI suite for brands. The tools will help marketers write scripts, produce videos and enhance their current ad assets, Aisha reports.

- Seoul AI summit: At an AI safety summit in Seoul, South Korea, government officials and AI industry executives agreed to apply elementary safety measures in the fast-moving field and establish an international safety research network.

- Microsoft’s AI PCs: At a pair of keynotes during its annual Build developer conference this week, Microsoft revealed a new lineup of Windows machines (and Surface laptops) it’s calling Copilot+ PCs, plus generative AI-powered features like Recall, which helps users find apps, files and other content they’ve viewed in the past.

- OpenAI’s voice debacle: OpenAI is removing one of the voices in ChatGPT’s text-to-speech feature. Users found the voice, called Sky, to be eerily similar to Scarlett Johansson (who’s played AI characters before) — and Johansson herself released a statement saying that she hired legal counsel to inquire about the Sky voice and get exact details about how it was developed.

- U.K. autonomous driving law: The U.K.’s regulations for autonomous cars are now official after they received royal assent, the final rubber stamp any legislation must go through before becoming enshrined in law.

More machine learnings

A few interesting pieces of AI-adjacent research for you this week. Prolific University of Washington researcher Shyam Gollakota strikes again with a pair of noise-canceling headphones that you can prompt to block out everything but the person you’d like to listen to. Wearing the headphones, you press a button while looking at the person, and the system samples the voice coming from that specific direction, using that to power an auditory exclusion engine so that background noise and other voices are filtered out.

The researchers, led by Gollakota and several grad students, call the system Target Speech Hearing, and presented it last week at a conference in Honolulu. Useful as both an accessibility tool and an everyday option, this is definitely a feature you can see one of the big tech companies stealing for the next generation of high-end cans.

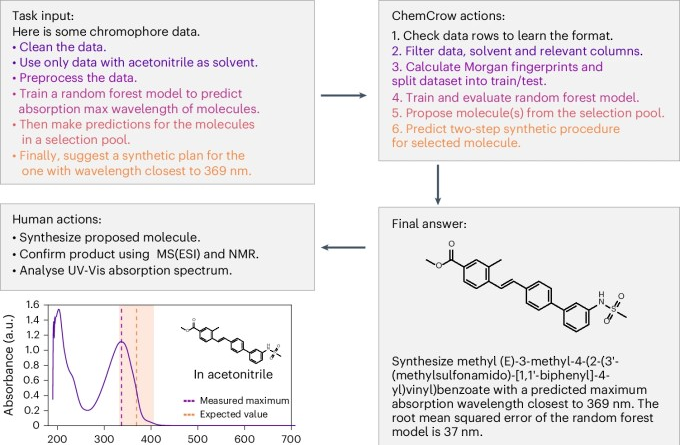

Chemists at EPFL are clearly tired of performing 18 tasks in particular, because they have trained up a model called ChemCrow to do them instead. Not IRL stuff like titrating and pipetting, but planning work like sifting through literature and planning reaction chains. ChemCrow doesn’t just do it all for the researchers, of course, but acts more as a natural language interface for the whole set, using whichever search or calculation option is needed.

The lead author of the paper showing off ChemCrow said it’s “analogous to a human expert with access to a calculator and databases,” in other words a grad student, so hopefully they can work on something more important or skip over the boring bits. Reminds me of Coscientist a bit. As for the name, it’s “because crows are known to use tools well.” Good enough!

Disney Research roboticists are hard at work making their creations move more realistically without having to hand-animate every possible movement. A new paper they’ll be presenting at SIGGRAPH in July shows a combination of procedurally generated animation with an artist interface for tweaking it, all working on an actual bipedal robot (a Groot).

The idea is you can let the artist create a type of locomotion — bouncy, stiff, unstable — and the engineers don’t have to implement every detail, just make sure it’s within certain parameters. It can then be performed on the fly, with the proposed system essentially improvising the exact motions. Expect to see this in a few years at Disney World…