Flow claims it can 100x any CPU’s power with its companion chip and some elbow grease

A Finnish startup called Flow Computing is making one of the wildest claims ever heard in silicon engineering: by adding its proprietary companion chip, any CPU can instantly double its performance, increasing to as much as 100x with software tweaks.

If it works, it could help the industry keep up with the insatiable compute demand of AI makers.

Flow is a spinout of VTT, a Finnish state-backed research organization that’s a bit like a national lab. The chip technology it’s commercializing, which it has branded the Parallel Processing Unit, is the result of research performed at that lab (though VTT is an investor, the IP is owned by Flow).

The claim, Flow is the first to admit, is laughable on its face. You can’t just magically squeeze extra performance out of CPUs across architectures and code bases. If you could, Intel or AMD or whoever would have done it years ago.

But Flow has been working on something that has been theoretically possible — it’s just that no one has been able to pull it off.

Central Processing Units have come a long way since the early days of vacuum tubes and punch cards, but in some fundamental ways they’re still the same. Their primary limitation is that, as serial rather than parallel processors, they can only do one thing at a time. Of course, they switch that thing a billion times a second across multiple cores and pathways, but these are all ways of accommodating the single-lane nature of the CPU. (A GPU, in contrast, does many related calculations at once but is specialized in certain operations.)

“The CPU is the weakest link in computing,” said Flow co-founder and CEO Timo Valtonen. “It’s not up to its task, and this will need to change.”

CPUs have gotten very fast, but even with nanosecond-level responsiveness, there’s a tremendous amount of waste in how instructions are carried out simply because of the basic limitation that one task needs to finish before the next one starts. (I’m simplifying here, not being a chip engineer myself.)

What Flow claims to have done is remove this limitation, turning the CPU from a one-lane street into a multi-lane highway. The CPU is still limited to doing one task at a time, but Flow’s PPU, as they call it, essentially performs nanosecond-scale traffic management on-die to move tasks into and out of the processor faster than has previously been possible.

Think of the CPU as a chef working in a kitchen. The chef can only work so fast, but what if that person had a superhuman assistant swapping knives and tools in and out of the chef’s hands, clearing the prepared food and putting in new ingredients, removing all tasks that aren’t actual chef stuff? The chef still only has two hands, but now the chef can work ten times as fast.

It’s not a perfect analogy, but it gives you an idea of what’s happening here, at least according to Flow’s internal tests and demos with the industry (and they are talking with everyone). The PPU doesn’t increase the clock frequency or push the system in other ways that would lead to extra heat or power; in other words, the chef is not being asked to chop twice as fast. It just more efficiently uses the CPU cycles that are already taking place.
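To put rough numbers on the chef analogy, here is a toy model in Python. It is only a sketch under invented assumptions, not a description of how the PPU actually works: the `Task` class, the cycle counts and both function names are made up for illustration, and none of them come from Flow. The model gives each task a waiting period (say, for data to arrive) followed by a burst of real work, then compares a core that sits idle through every wait with one whose waits are overlapped by an outside helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical unit of work, measured in made-up clock cycles."""
    compute: int  # cycles of actual work on the core
    stall: int    # cycles spent waiting (e.g. for data) before work can start


def serial_cycles(tasks):
    """Each task waits out its stall and then computes before the next begins."""
    return sum(t.stall + t.compute for t in tasks)


def overlapped_cycles(tasks):
    """All stalls start at cycle 0 and tick down at the same time.

    The single core still runs one task at a time, taking tasks in the
    order they become ready. The core itself is no faster than before.
    """
    clock = 0
    for t in sorted(tasks, key=lambda t: t.stall):
        # Wait only if this task's data has not arrived yet, then do the work.
        clock = max(clock, t.stall) + t.compute
    return clock


# Ten identical tasks: 8 cycles of waiting, 2 cycles of real work each.
tasks = [Task(compute=2, stall=8) for _ in range(10)]

serial = serial_cycles(tasks)
overlapped = overlapped_cycles(tasks)

print(f"serial: {serial} cycles")
print(f"overlapped: {overlapped} cycles")
print(f"speedup: {serial / overlapped:.2f}x")
```

With these made-up numbers the serial version takes 100 cycles and the overlapped one takes 28, even though the core spends exactly 20 cycles on real work in both cases. The gain comes entirely from idle time that is no longer wasted, which is the general shape of the claim, whatever the real mechanism turns out to be.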

This type of thing isn’t brand new, says Valtonen. “This has been studied and discussed in high-level academia. You can already do parallelization, but it breaks legacy code, and then it’s useless.”

So it could be done. It just couldn’t be done without rewriting all the code in the world from the ground up, which kind of makes it a non-starter. A similar problem was solved by another Nordic compute company, ZeroPoint, which achieved high levels of memory compression while remaining transparent to the rest of the system.

Flow’s big achievement, in other words, isn’t high-speed traffic management, but rather doing it without having to modify any code on any CPU or architecture that it has tested. It sounds kind of unhinged to say that arbitrary code can be executed twice as fast on any chip with no modification beyond integrating the PPU with the die.

Therein lies the primary challenge to Flow’s success as a business: unlike a software product, Flow’s tech needs to be included at the chip design level, meaning it doesn’t work retroactively, and the first chip with a PPU would necessarily be quite a ways down the road. Flow has shown that the tech works in FPGA-based test setups, but chipmakers would have to commit quite a lot of resources to see the gains in question.

The scale of those gains, and the fact that CPU improvements have been iterative and fractional over the last few years, may well have those chipmakers knocking on Flow’s door rather urgently, though. If you can really double your performance in one generation with one layout change, that’s a no-brainer.

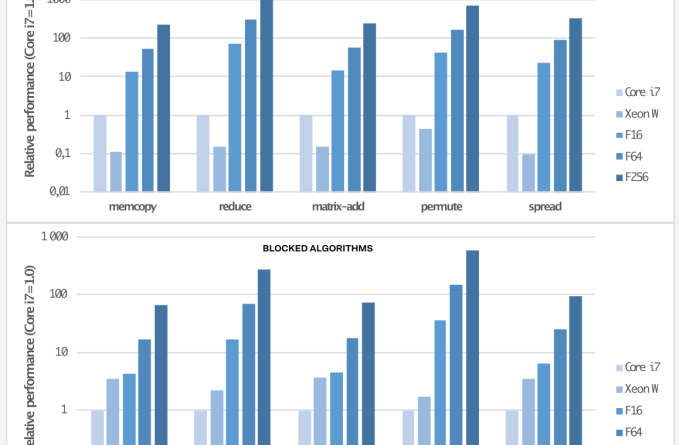

Further performance gains come from refactoring and recompiling software to work better with the PPU-CPU combo. Flow says it has seen increases up to 100x with code that’s been modified (though not necessarily fully rewritten) to take advantage of its technology. The company is working on offering recompilation tools to make this task simpler for software makers who want to optimize for Flow-enabled chips.

Analyst Kevin Krewell from Tirias Research, who was briefed on Flow’s tech and was suggested as an outside perspective on these matters, was more worried about industry uptake than the fundamentals.

He pointed out, quite rightly, that AI acceleration is the biggest market right now, something that can be targeted with special silicon like Nvidia’s popular H100. Though a PPU-accelerated CPU would lead to gains across the board, chipmakers might not want to rock the boat too hard. And there’s simply the question of whether those companies are willing to invest significant resources into a largely unproven technology when they likely have a five-year plan that would be upset by that choice.

Will Flow’s tech become a must-have component for every chipmaker out there, catapulting it to fortune and prominence? Or will penny-pinching chipmakers decide to stay the course and keep extracting rent from the steadily growing compute market? Probably somewhere in between — but it is telling that, even if Flow has achieved a major engineering feat here, like all startups, the future of the company depends on its customers.

Flow is just now emerging from stealth, with €4 million (about $4.3 million) in pre-seed funding led by Butterfly Ventures, with participation from FOV Ventures, Sarsia, Stephen Industries, Superhero Capital and Business Finland.