The world needs more electricity—but don’t blame AI, Microsoft president Brad Smith says

Hello and welcome to Eye on AI. In this edition: Microsoft President Brad Smith on AI’s power demands; the U.S. imposes new export controls on chipmaking technology; a new milestone for distributed AI training; and are LLMs hitting a wall? It’s complicated.

I grew up watching reruns of the original Star Trek series. As anyone who’s ever seen that show will know, there was a moment in seemingly every episode when the starship Enterprise would get into some kind of jam and need to outrun a pursuing alien spacecraft, or escape the powerful gravitational field of some hostile planet, or elude the tractor beam of some wily foe.

In every case, Captain Kirk would get on the intercom to the engine room and tell Scotty, the chief engineer, “we need more power.” And, despite Scotty’s protestations that the engine plant was already producing as much power as it possibly could without melting down, and despite occasional malfunctions that would add dramatic tension, Scotty and the engineering team would inevitably pull through and deliver the power the Enterprise needed to escape danger.

AI, it seems, is entering its “Scotty, we need more power” phase. In an interview the Financial Times published this week, two senior OpenAI executives said the company planned to build its own data centers in the U.S. Midwest and Southwest. This comes amid reports that there have been tensions with its large investor and strategic partner, Microsoft, over access to enough computing resources to keep training its advanced AI models. It also comes amid reports that OpenAI wants to construct data centers so large that they would each draw five gigawatts of power—more than the electricity demands of the entire city of Miami. (It’s unclear if the data centers the two OpenAI execs mentioned to the FT would be ones of such extraordinary size.)

Microsoft has plans for large new data centers of its own, as do Google, Amazon’s AWS, Meta, Elon Musk’s xAI, and more. All this data center construction has raised serious concerns about where all the electricity needed to power these massive AI supercomputing clusters will come from. In many cases, the answer seems to be nuclear power—including a new generation of largely unproven small nuclear reactors, which might be dedicated to powering just a single data center—which carries risks of its own.

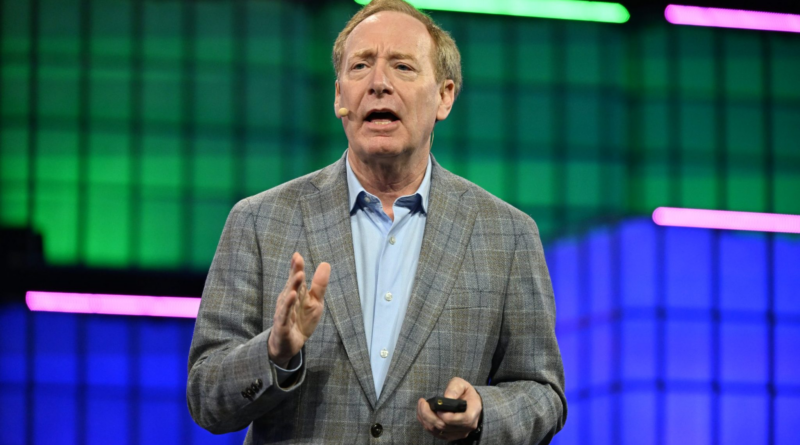

On the sidelines of Web Summit in Lisbon a few weeks ago, I sat down with Microsoft President Brad Smith. Our conversation covered a variety of topics, but much of it focused on AI’s seemingly insatiable demand for energy. As I mentioned in Eye on AI last Tuesday, some people at Web Summit, including Sarah Myers West of the AI Now Institute—who was among the panelists on a main stage session I moderated debating “Is the AI bubble about to burst?”—argued that the energy demands of today’s large language model-based AI systems are far too great. We’d all be better off as a planet, she argued, if AI were a bubble and it burst—and we moved on to some more energy-efficient kind of AI technology.

Well, needless to say, Smith didn’t exactly agree with that position. He was keen to point out that AI is hardly the only reason the U.S. needs to upgrade its electricity generating capacity and, just as critically, its electrical grid infrastructure.

He noted that the U.S. growth of energy generation capacity had only been in the low single digits annually over the past two decades. This was more than sufficient to keep pace with demand growth of about 0.5% per year during this period. But now electrical consumption is rising as much as 2% per year—and while AI is among the drivers of this growth, it is not the principal one. Data center computing of all kinds, not just AI, is a factor. But more important is the growth in electric vehicles and the electricity demands of a resurgent U.S. manufacturing sector.

AI currently constitutes about 3.5% of net energy demand in the U.S. and might increase to 7% to 8% by decade’s end, Smith said. Even if it hit 15% of energy demand between then and 2070, that would still mean that 85% of the demand was coming from other sources.

“The world needs more electricity and it will take a lot of work on a global basis to accommodate that,” Smith said.

Smith said that Microsoft’s discussions with the Biden Administration over the past year have been about streamlining the federal permitting process for new data centers and the conversion of existing brownfield sites. Contrary to common perceptions, he said, it is not local government permitting or “not in my backyard” (NIMBY) objections from local communities that pose the biggest headaches for tech companies looking to build data centers or power companies trying to upgrade the grid. Obtaining local permissions usually takes about nine months, he said. A far bigger problem is the federal government. Smith said obtaining a single Army Corps of Engineers wetlands permit takes 20 months on average, significantly slowing construction plans. He said he was optimistic that the incoming Trump Administration would clear away some of this red tape.

Smith said Microsoft would be thrilled if Trump appointed North Dakota Gov. Doug Burgum—who, for a time in the early 2000s, headed Microsoft’s Business Solutions division after he sold a company he founded to the tech giant—as energy czar. That didn’t exactly happen. Trump later nominated oil industry veteran Chris Wright to lead the Department of Energy, while tapping Burgum to be the Secretary of the Interior. Still, Burgum may exercise considerable influence on U.S. energy policy from that position.

Smith also highlighted that another impediment to upgrading America’s electrical grid is a lack of trained electricians to work on high voltage transmission lines and transformers. The U.S., he said, needed to fill a projected deficit of 500,000 electricians in the next eight years. The country needed to re-emphasize vocational training in high school and in community colleges. And he said Microsoft hoped to work with unions to help improve apprenticeship opportunities for those who wanted to train for the field.

There’s been a lot of discussion about AI’s carbon footprint, although this is often misunderstood. In the U.S., the electricity used to train and run AI models often comes from renewable sources or carbon-free nuclear power. But the power demands of AI are sometimes now causing cloud computing companies to buy up so much renewable capacity that other industries are forced to rely on fossil fuel-based generation. What’s more, the process of manufacturing computer chips for AI applications, as well as the manufacture of the concrete and steel that goes into building data centers, contribute significantly to the overall carbon footprint of AI technology. Microsoft is among the tech companies that have said their plans to be carbon neutral by 2030 have been thrown off course due to their expanding data center infrastructure.

Smith said Microsoft was focused on investing in technologies that would enable it to produce lower emissions by 2030—and was also investing heavily in technologies, such as carbon capture and storage, that actually remove carbon dioxide from the environment. He noted that this contrasted with some other technology companies that are relying more heavily on buying carbon offsetting credits to reach their net zero goals. (Smith didn’t say it, but Microsoft’s competitor Meta has been criticized for leaning on carbon credits rather than taking steps to directly reduce the carbon footprint of its data centers.)

It’s unclear if the AI industry will, like good old Captain Kirk, get the energy it needs to save its own enterprise. And it’s not entirely clear that we, as citizens of Earth, should be rooting for them. What is clear, however, is that it’s going to be a drama worth tuning in for.

With that, here’s more AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn

**Before we get to the news:** Last chance to join me in San Francisco next week for the Fortune Brainstorm AI conference! If you want to learn more about what’s next in AI and how your company can derive ROI from the technology, Fortune Brainstorm AI is the place to do it. We’ll hear about the future of Amazon Alexa from Rohit Prasad, the company’s senior vice president and head scientist, artificial general intelligence; we’ll learn about the future of generative AI search at Google from Liz Reid, Google’s vice president, search; and about the shape of AI to come from Christopher Young, Microsoft’s executive vice president of business development, strategy, and ventures; and we’ll hear from former San Francisco 49er Colin Kaepernick about his company Lumi and AI’s impact on the creator economy. The conference is Dec. 9-10 at the St. Regis Hotel in San Francisco. You can view the agenda and apply to attend here. (And remember, if you write the code KAHN20 in the “Additional comments” section of the registration page, you’ll get 20% off the ticket price—a nice reward for being a loyal Eye on AI reader!)

AI IN THE NEWS

Intel CEO Pat Gelsinger suddenly retires. He had lost the confidence of the board, which last week told him to either retire immediately or be fired, according to Bloomberg. Gelsinger, who had spent most of his career at Intel before serving as CEO of VMware, returned to the troubled chipmaker in 2021 to try to turn the company around following production snafus. But under his leadership Intel has continued to stumble, failing to gain market share in the specialized chips for AI applications, while embarking on a plan to build new, cutting-edge chip foundries so capital intensive it risked bankrupting the company. Intel chief financial officer David Zinsner and executive vice president Michelle Johnston Holthaus are serving as interim co-CEOs while the board searches for Gelsinger’s replacement.

U.S. imposes more export controls on the sale of chipmaking equipment to China. The move, announced by the U.S. Commerce Secretary Gina Raimondo, is designed to hobble China’s ability to build military AI applications. The restrictions target 24 types of advanced chipmaking tools and high-bandwidth memory components that are also critical to AI chips. The new rules have extraterritorial reach too, extending to non-U.S. companies that use any U.S.-made tech, which is most equipment vendors. Allies like Japan and the Netherlands secured exemptions, after agreeing to apply their own additional export controls, but some critics questioned why only some Huawei chipmaking plants were on the new export control list and why CXMT, a Chinese producer of high-bandwidth memory, was also not targeted. You can read more in the Financial Times here.

OpenAI ponders advertising revenue and 1 billion users. OpenAI is exploring the possibility of introducing advertising to its AI products as it seeks to bolster revenue amid high costs for developing advanced models. Sarah Friar, the company’s chief financial officer, told the Financial Times that the company has no active plans to introduce ads, but was open to the idea. OpenAI has hired advertising talent from tech giants like Meta and Google and pointed to the advertising expertise of executives like Kevin Weil. The company’s annualized revenues have reached $4 billion, driven by enterprise customers of its API and paid users of its subscription-based ChatGPT, but its steep R&D expenses, including computing costs and salaries, are projected to surpass $5 billion annually.

EYE ON AI RESEARCH

Another distributed training milestone is reached. There’s increasing interest in whether AI systems can be trained in a fully distributed way. That would mean that remotely located computers—perhaps even just home laptops that increasingly have a graphics processing chip (GPU) embedded in them—are yoked together to train an AI model, rather than having to use a cluster of GPUs in a data center. Now AI startup Prime Intellect has trained a 10 billion parameter LLM called Intellect-1 in a completely distributed way. While much smaller than most cutting-edge frontier models—which can run to hundreds of billions of parameters—this is still 10 times larger than any other model trained this way. The distributed training is slower and less efficient than data center training and the resulting model does okay, but not stellar, on a slate of benchmark tests. Still, just as peer-to-peer file sharing posed a significant challenge to copyright, distributed AI training undermines the ability of governments to impose effective regulations over the creation and use of advanced AI models. You can read Prime Intellect’s blog on Intellect-1 here.

FORTUNE ON AI

Three charts explaining where the AI frenzy is right now —by Allie Garfinkle

Elon Musk is ratcheting up his attacks on his old partner Sam Altman, calling him ‘Swindly Sam’ and OpenAI a ‘market-paralyzing gorgon’ —by Marco Quiroz-Gutierrez

The new ‘land grab’ for AI companies, from Meta to OpenAI, is military contracts —by Kali Hays

Commentary: Getty Images CEO: Respecting fair use rules won’t prevent AI from curing cancer —by Craig Peters

AI CALENDAR

Dec. 2-6: AWS re:Invent, Las Vegas

Dec. 9-10: Fortune Brainstorm AI, San Francisco (register here)

Dec. 10-15: Neural Information Processing Systems (NeurIPS) 2024, Vancouver, British Columbia

Jan. 7-10: CES, Las Vegas

Jan. 20-25: World Economic Forum, Davos, Switzerland

BRAIN FOOD

Are LLMs hitting a wall? Kind of, sort of, it depends—says one leading AI entrepreneur. Nathan Benaich, whose solo general partner venture firm Air Street Capital is among the leading seed investors in AI companies, recently published a podcast in which he interviewed Eiso Kant, the cofounder and chief technology officer at Poolside, one of the many startups building an AI-powered coding assistant. (Kant’s cofounder is former GitHub CTO Jason Warner; Air Street is an investor in Poolside.) In the podcast, Benaich asked Kant about the idea that LLMs are hitting a wall. Benaich later posted that the “tl;dr” of Kant’s answer was: no. But it is worth actually listening to Kant’s entire answer, because I think a more accurate tl;dr would have been: kind of, sort of, it depends.

Kant said, basically, that the most general-purpose LLMs essentially are hitting a wall in terms of scaling. And that wall is data. These general-purpose LLMs—having ingested the entire internet—are running out of good quality data on which to train, especially given the size of the models in terms of parameter count (the number of adjustable variables they have). We know from Google DeepMind’s seminal 2022 “Chinchilla” research paper that the larger the model, the more data it needs to train efficiently. But the cutting-edge models are so large that there simply isn’t enough real world data to train them to these “Chinchilla optimal” levels. Frontier AI labs have tried to overcome this deficit by using synthetic data—getting an AI model to generate data that looks like human-generated data. Yet this approach too has a problem in that it is difficult to match the quality and distribution of real world data. This mismatch can eventually lead to decreased model performance and ultimately, if synthetic data comes to dominate the training set, model collapse (where the AI model fails completely).
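For readers who want a feel for the numbers, here is a rough back-of-the-envelope sketch in Python. It assumes the commonly cited rule of thumb drawn from the Chinchilla paper of roughly 20 training tokens per parameter, which is an approximation rather than an exact law. The stock of usable training data (15 trillion tokens) is a purely hypothetical figure chosen for illustration, not something Kant or the paper stated, and the function names are invented for this example.

```python
# Approximate rule of thumb associated with the Chinchilla paper:
# about 20 training tokens per model parameter. Treat this as a rough guide.
TOKENS_PER_PARAM = 20


def chinchilla_optimal_tokens(n_params: float) -> float:
    """Estimate the training tokens a model of n_params would want."""
    return TOKENS_PER_PARAM * n_params


def data_shortfall(n_params: float, available_tokens: float) -> float:
    """Tokens still missing after using all available data (0 if enough)."""
    return max(0.0, chinchilla_optimal_tokens(n_params) - available_tokens)


if __name__ == "__main__":
    # Hypothetical stock of good-quality text, for illustration only.
    assumed_available_tokens = 15e12

    for n_params in (70e9, 400e9, 2e12):
        wanted = chinchilla_optimal_tokens(n_params)
        missing = data_shortfall(n_params, assumed_available_tokens)
        print(
            f"{n_params / 1e9:>6.0f}B params: wants {wanted / 1e12:.1f}T tokens, "
            f"shortfall {missing / 1e12:.1f}T"
        )
```

Under these assumptions, the smaller models fit comfortably within the available data while the largest one does not, which is the shape of the "data wall" Kant describes.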

But Kant said that when it came to Poolside’s mission of creating AI models that can build and maintain software, there was no wall. And that’s because when it comes to code, it is possible to validate the quality of any synthetic training data, because if it is not valid code, it will not compile correctly. So code, Kant seemed to argue, was perhaps a special case where the problems of synthetic data could be overcome.
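To make the idea concrete, here is a minimal Python sketch of filtering synthetic code samples by whether they compile. This is an illustration only and not Poolside's actual pipeline, which the podcast summary does not describe. It uses Python's built-in `compile()`, which checks syntax only, a far weaker test than building a project and running its tests. The helper names and sample snippets are made up for the example.

```python
def compiles(source: str) -> bool:
    """Return True if the source parses and compiles to Python bytecode."""
    try:
        compile(source, "<synthetic-sample>", "exec")
        return True
    except (SyntaxError, ValueError):
        # SyntaxError: invalid code; ValueError: e.g. null bytes in the source.
        return False


def filter_valid(samples: list[str]) -> list[str]:
    """Keep only the samples that pass the compile check."""
    return [s for s in samples if compiles(s)]


if __name__ == "__main__":
    # Hypothetical model outputs: one valid snippet and two broken ones.
    synthetic_samples = [
        "def add(a, b):\n    return a + b\n",
        "def broken(:\n    return 1\n",
        "for i in range(3) print(i)\n",
    ]
    kept = filter_valid(synthetic_samples)
    print(f"kept {len(kept)} of {len(synthetic_samples)} samples")
```

The point is that code comes with an automatic pass/fail signal, which ordinary prose does not, so low-quality synthetic samples can be discarded before they reach the training set.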

You can listen to Kant’s conversation with Benaich here.