Adobe Firefly can now generate more realistic images

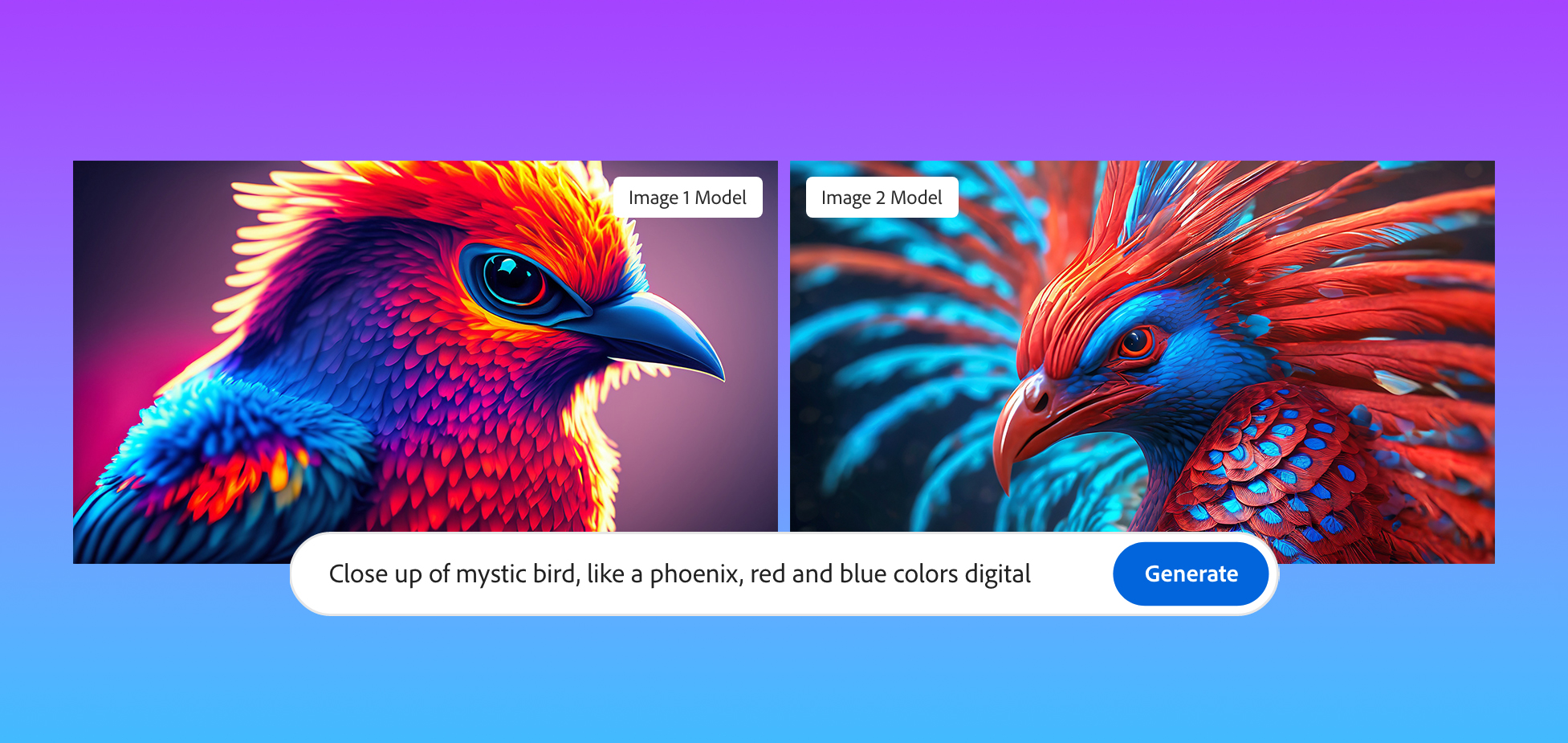

At MAX, its annual conference for creatives, Adobe today announced that it has updated the models that power Firefly, its generative AI image creation service. According to Adobe, the Firefly Image 2 Model, as it’s officially called, will be better at rendering humans, including facial features, skin, bodies and hands (which have long vexed similar models).

Adobe also announced today that Firefly’s users have generated three billion images since the service launched about half a year ago, with one billion generated last month alone. The vast majority of Firefly users (90%) are also net-new to Adobe’s products. Most of these users likely use the Firefly web app, which helps explain why, a few weeks ago, the company decided to turn what was essentially a demo site for Firefly into a full-fledged Creative Cloud service.

Alexandru Costin, Adobe’s VP for generative AI and Sensei, told me that the new model was not only trained on more recent images from Adobe Stock and other commercially safe sources, but is also significantly larger. “Firefly is an ensemble of multiple models and I think we’ve increased their sizes by a factor of three,” he told me. “So it’s like a brain that’s three times larger and that will know how to make these connections and render more beautiful pixels, more beautiful details for the user.” The company also increased the size of the dataset by almost a factor of two, which in turn should give the model a better understanding of what users are asking for.

That larger model is obviously more resource-intensive, but Costin noted that it should run at the same speed as the first model. “We’re continuing our explorations and investment in the distillation, pruning, optimization and quantization. There’s a lot of work going into making sure customers get a similar experience, but we don’t balloon the cloud costs too much.” Right now, though, Adobe’s focus is on quality over optimization.

For now, the new model will be available through the Firefly web app, but in the near future it will also come to Creative Cloud apps like Photoshop, where Firefly powers popular features like generative fill. That integration is something Costin stressed, too. Adobe thinks about generative AI less as content creation and more as generative editing, he said.

“What we’ve seen our customers do, and this is why Photoshop generative fill is so successful, is not generating new assets only but it’s taking existing assets — a photo shoot, a product shoot — and then using generative capabilities to basically enhance existing workflows. So we’re calling our umbrella term for defining generative as more generative editing than just text-to-image because we think that’s more important for our customers.”

With this new model, Adobe is also introducing a few new controls in the Firefly web app that let users set the depth of field for their images, as well as motion blur and field-of-view settings. Also new are the ability to upload an existing image and have Firefly match its style, and an auto-complete feature for writing prompts (which Adobe says is optimized to help you get to a better image).